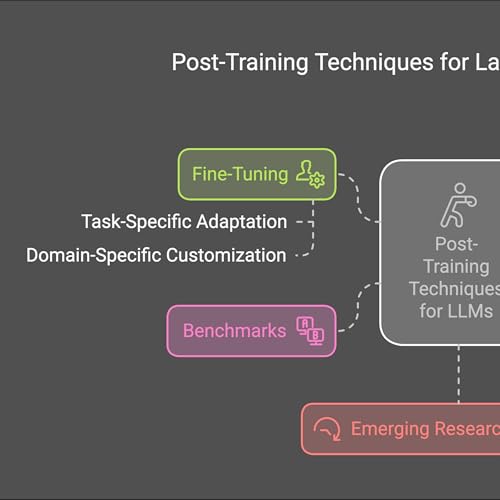

LLM Post-Training: Fine-Tuning and Alignment Techniques

About this listen

This document surveys post-training techniques for large language models (LLMs), the methods applied after pretraining to refine a model's behavior. The authors group these methods into three categories: fine-tuning, reinforcement learning, and test-time scaling. For each category, they explore how it improves reasoning, accuracy, and alignment with human values. The survey analyzes the algorithms and strategies within these categories, including reinforcement learning approaches such as PPO and DPO and test-time scaling techniques such as chain-of-thought prompting and beam search. It also discusses benchmarks for evaluating these post-training methods and highlights emerging research directions in the field.